The Czochralski (CZ) method of growing silicon crystals is quite easy to visualize. Chunks of pure silicon with no particular crystallographic structure are melted at 1414°C in a graphite crucible. A small seed of silicon is then brought into contact with the surface of the melt to start crystallization. Molten silicon solidifies at the interface between seed and melt as the seed is slowly withdrawn. A large ingot begins to grow both vertically and laterally with the atoms tending to arrange themselves in a perfect crystal lattice.

Unfortunately, this classic method of producing crystals has a number of disadvantages. Crystal growth is slow and energy intensive, leading to high production costs. Impurities may be introduced due to interaction between the melt and the crucible. And in the case of PV the aim is of course to produce thin solar cell wafers rather than large ingots, so wire saws are used to cut the ingot into thin slices, a time-consuming process that involves discarding valuable material. For these reasons the PV industry has spent a lot of R&D effort investigating alternatives, including pulling crystals in thin sheet or ribbon form, and some of these are now used in volume production. Whatever method is employed, the desired result is pure crystalline silicon with a simple and consistent atomic structure.

The element silicon has atomic number 14, meaning that each atom has 14 negatively charged electrons orbiting a positively charged nucleus, rather like a miniature solar system. Ten of the electrons are tightly bound to the nucleus and play no further part in the PV story, but the other four valence electrons are crucial and explain why each atom aligns itself with four immediate neighbours in the crystal. This is illustrated by Figure 2.4 (a). The ‘glue’ bonding two atoms together is two shared valence electrons, one from each atom. Since each atom has four valence electrons that are not tightly bound to its nucleus, a perfect lattice structure is formed when each atom forms bonds with its four nearest neighbours (which are actually at the vertices of a three-dimensional tetrahedron, but shown here in two dimensions for simplicity). The structure has profound implications for the fundamental physics of silicon solar cells.

Silicon in its pure state is referred to as an intrinsic semiconductor. It is neither an insulator like glass, nor a conductor like copper, but something in between. At low temperatures its valence electrons are tightly constrained by bonds, as in part (a) of the figure, and it acts as an insulator. But bonds can be broken if sufficiently jolted by an external source of energy such as heat or light, creating electrons that are free to migrate through the lattice. If we shine light on the crystal the tiny packets, or quanta, of light energy can produce broken bonds if sufficiently energetic. The silicon becomes a conductor, and the more bonds are broken the greater its conductivity.
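The condition that a quantum of light must be "sufficiently energetic" can be made concrete with a short calculation. The sketch below is illustrative only: the threshold energy (usually called the band gap) is not given in the text above, and a value of about 1.12 eV for silicon near room temperature is assumed here. The function names are hypothetical.

```python
# Physical constants (exact SI values).
PLANCK_J_S = 6.62607015e-34          # Planck constant, J*s
LIGHT_SPEED_M_S = 299_792_458.0      # speed of light, m/s
ELECTRON_CHARGE_C = 1.602176634e-19  # elementary charge, C (also J per eV)

# Assumption: energy needed to free a valence electron in silicon
# near room temperature (the band gap), roughly 1.12 eV.
SILICON_THRESHOLD_EV = 1.12


def photon_energy_ev(wavelength_nm: float) -> float:
    """Energy of one quantum of light, E = h*c / wavelength, in electron-volts."""
    wavelength_m = wavelength_nm * 1e-9
    return PLANCK_J_S * LIGHT_SPEED_M_S / wavelength_m / ELECTRON_CHARGE_C


def can_break_bond(wavelength_nm: float,
                   threshold_ev: float = SILICON_THRESHOLD_EV) -> bool:
    """True if a photon of this wavelength carries at least the threshold energy."""
    return photon_energy_ev(wavelength_nm) >= threshold_ev


def cutoff_wavelength_nm(threshold_ev: float = SILICON_THRESHOLD_EV) -> float:
    """Longest wavelength whose photons still reach the threshold energy."""
    return PLANCK_J_S * LIGHT_SPEED_M_S / (threshold_ev * ELECTRON_CHARGE_C) * 1e9


if __name__ == "__main__":
    for wavelength in (500.0, 1000.0, 1500.0):
        print(wavelength, photon_energy_ev(wavelength), can_break_bond(wavelength))
    print(cutoff_wavelength_nm())
```

Under this assumption, green light at 500 nm (about 2.48 eV per photon) can break a bond, whereas infrared light at 1500 nm cannot; the cutoff falls at roughly 1107 nm.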

Figure 2.4 (b) shows an electron ε1 that has broken free to wander through the lattice. It leaves behind a broken bond, indicated by a dotted line. The free electron carries a negative charge and, since the crystal remains electrically neutral, the broken bond must be left with a positive charge. In effect it is a positively charged particle, known as a hole. We see that breaking a bond has given rise to a pair of equal and opposite charged ‘particles’, an electron and a hole. Not surprisingly they are referred to as an electron–hole pair.

At first sight the hole might appear to be an immovable object fixed in the crystal lattice. But now consider the electron ε2 shown in the figure, which has broken free from somewhere else in the lattice. It is quite likely to jump into the vacant spot left by the first electron, restoring the original broken bond, but leaving a new broken bond behind. In this way a broken bond, or hole, can also move through the crystal, but as a positive charge. It is analogous to a bubble moving in a liquid; as the liquid moves one way the bubble is seen travelling in the opposite direction.

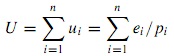

We see that the electrical properties of intrinsic silicon depend on the number of mobile electron–hole pairs in the crystal lattice. At low temperatures, in the dark, it is effectively an insulator. At higher temperatures, or in sunlight, it becomes a conductor. If we attach two contacts and apply an external voltage using a battery, current will flow – due to free electrons moving one way, holes the other. We have now reached an important stage in understanding how a silicon wafer can be turned into a practical solar cell.
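The dependence of conductivity on the number of mobile electron–hole pairs can be sketched with the standard relation σ = q(nμₙ + pμₚ), where n = p in intrinsic material. The numbers below are assumptions, not values from the text: typical textbook figures for silicon near room temperature (about 10¹⁰ pairs per cm³, electron mobility about 1350 cm²/V·s, hole mobility about 480 cm²/V·s). The function name is hypothetical.

```python
ELECTRON_CHARGE_C = 1.602176634e-19  # elementary charge, C

# Assumed, typical room-temperature values for silicon (not from the text).
PAIR_DENSITY_PER_CM3 = 1.0e10        # mobile electron-hole pairs per cm^3
ELECTRON_MOBILITY_CM2_VS = 1350.0    # electron mobility, cm^2/(V*s)
HOLE_MOBILITY_CM2_VS = 480.0         # hole mobility, cm^2/(V*s)


def intrinsic_conductivity_s_per_cm(
    pair_density_per_cm3: float,
    electron_mobility: float = ELECTRON_MOBILITY_CM2_VS,
    hole_mobility: float = HOLE_MOBILITY_CM2_VS,
) -> float:
    """Conductivity sigma = q * n * (mu_n + mu_p), with n = p = pair density.

    Electrons and holes drift in opposite directions but carry opposite
    charges, so their contributions to the current add.
    """
    return ELECTRON_CHARGE_C * pair_density_per_cm3 * (electron_mobility + hole_mobility)


if __name__ == "__main__":
    dark = intrinsic_conductivity_s_per_cm(PAIR_DENSITY_PER_CM3)
    # Hypothetical illumination that creates 1000x more mobile pairs.
    lit = intrinsic_conductivity_s_per_cm(1000.0 * PAIR_DENSITY_PER_CM3)
    print(dark, lit, lit / dark)
```

With these assumed values the dark conductivity is of the order of 10⁻⁶ S/cm, and it scales in direct proportion to the number of pairs: a thousandfold increase in pairs gives a thousandfold increase in conductivity.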

Yet there is a vital missing link: remove the external voltage and the electrons and holes wander randomly in the crystal lattice with no preferred directions. There is no tendency for them to produce current flow in an external circuit. A pure silicon wafer, even in strong sunlight, cannot generate electricity and become a solar cell. What is needed is a mechanism to propel electrons and holes in opposite directions in the crystal lattice, forcing current through an external circuit and producing useful power. This mechanism is provided by one of the great inventions of the 20th century, the semiconductor p–n junction.